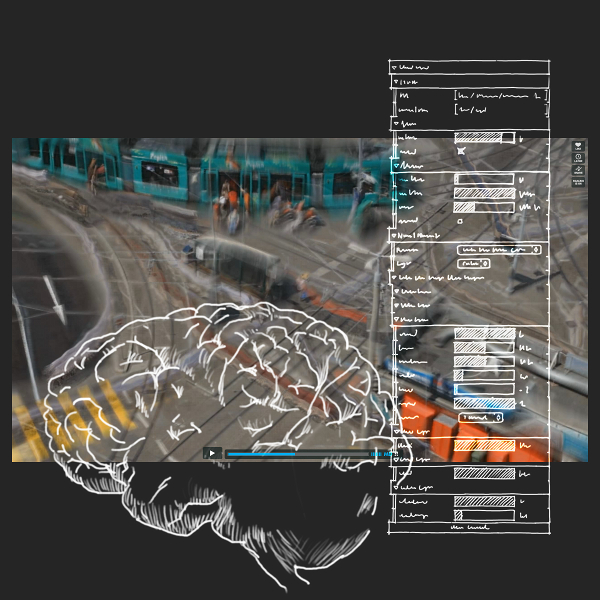

Urban Chromatography

Mediacities 2013 Workshop

Generative Video Processing with Neural Networks

Abstract

NeuroVision is a sandbox for generative video processing that runs in the browser.

It transforms live video from mobile devices and webcams based on colors, flows and rhythms.

We will learn how to process video feeds and web videos with neural networks, and how to use NeuroVision as an artistic tool for our own video recordings.

The NeuroVision Sandbox

Sandboxes are an important tool for teaching creative coding practices to artists:

Sketchpad.cc is a popular sandbox based on Processing that lets students explore computation visually and collaboratively. Another sandbox, one more focused on the parallel programming that GPUs enable, is Pixelshaders.com.

With NeuroVision we introduce a sandbox for GPU-based video processing.

We have used this sandbox in our own work, including Transits [1] and the Neural Chromatographic Orchestra [2], and would now like to share it as a tool for artists who want to use live video processing in their work.

Sign Up

You can now sign up for the workshop!

Once you have submitted your Mediacities registration, you can visit the Sign Up Page to register for this workshop.

Be quick, since places in the workshop are limited.

Registration Fee

The workshop is included in the conference registration.

Students can attend the complete conference for 50 USD.

Regular visitors can obtain day passes for 50 USD per day, or attend the complete conference for 150 USD.

Check out the Registration Page for more info.

Topics

| Neural Networks | Computational Chromatography | OpenGL Shading Language | Video City |
|---|---|---|---|
| Introduction to NN | Coding Colors | Introduction to GLSL | Urban Colors |
| The Neuron as Computational Unit | Optical Flow | Video Filters | Urban Motion |
| Layers, Memory and Feedback | Color Attraction | Video Transformations | Urban Rhythms |
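To give a feel for the "Neuron as Computational Unit" and "Coding Colors" topics, here is a minimal sketch that assumes a single artificial neuron reading the red, green and blue channels of one pixel. It is written in TypeScript for illustration only and is not NeuroVision code. In the sandbox, comparable per-pixel logic would be written in GLSL. The names `neuron` and `sigmoid`, the weights and the bias are all hypothetical values chosen for this example.

```typescript
// Hypothetical sketch: one artificial neuron applied to a single pixel's color.
// Not NeuroVision code; in the sandbox, similar logic would run per pixel in GLSL.

type RGB = [number, number, number]; // red, green, blue channels in [0, 1]

// Logistic activation: squashes any real number into the range (0, 1).
function sigmoid(x: number): number {
  return 1 / (1 + Math.exp(-x));
}

// A neuron computes a weighted sum of its inputs plus a bias,
// then passes that sum through an activation function.
function neuron(input: RGB, weights: RGB, bias: number): number {
  const sum =
    bias +
    input[0] * weights[0] +
    input[1] * weights[1] +
    input[2] * weights[2];
  return sigmoid(sum);
}

// Illustrative (arbitrary) weights that make the neuron respond to reddish pixels.
const redDetector: RGB = [6, -3, -3];
const redBias = -1;

const red: RGB = [1, 0, 0];
const green: RGB = [0, 1, 0];

console.log(neuron(red, redDetector, redBias));   // strong response, close to 1
console.log(neuron(green, redDetector, redBias)); // weak response, close to 0
```

With these weights, a pure red pixel drives the weighted sum to 5 and the output close to 1, while a pure green pixel drives it to -4 and the output close to 0. Changing the weights changes which colors the neuron responds to.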

Workshop Details

The workshop is limited to 30 participants.

It is spread over Friday and Saturday to avoid clashing with the keynote talks.

Friday

| Time | Topic |
|---|---|
| 10:00 – 11:00 | Intro to the NeuroVision Sandbox |
| 11:00 – 12:00 | Intro to Neural Networks |
| 1:45 – 3:00 | Intro to Chromatography |
| 3:15 – 4:15 | Hands-On Coding - Part I |
| 4:15 – 5:30 | Hands-On Coding - Part II |

Saturday

| Time | Topic |
|---|---|
| 1:15 – 3:30 | Field Trip with Ursula Damm |
| 5:00 – 6:00 | Hands-On Coding - Part III |

Sunday

| Time | Topic |
|---|---|
| 3:45 – 4:30 | Workshop Presentation |

Martin Schneider will lead you through the framework and Ursula Damm will take you on a field trip.

Hands-on coding sessions will be held in small groups with assistants to help you.

On Sunday, all workshop participants can show their results in a Pecha Kucha-style presentation.

Skill Level

You will be using the WebGL variant of GLSL inside the NeuroVision Sandbox.

No special skills are required; however, some coding literacy is recommended.

If you already know a programming language such as Java, C or Processing, you are on the safe side.

The NeuroVision Sandbox is similar to Mr Doob’s GLSL Sandbox, Toby Schachman’s Shader Editor and Shadertoy by Iñigo Quilez. Playing around with those tools is excellent preparation for our workshop.

If you want to dig deeper, the excellent GLSL tutorial by TyphoonLabs and the WebGL specification are a good way to get started.

Equipment

For this workshop you need to bring your own laptop.

It should be equipped with a GPU and a WebGL-capable browser. If you are not sure, try http://get.webgl.org/.

We highly recommend a laptop with a built-in webcam, or a webcam that can be attached to the top of your screen. This will allow you to live-code chromatographic effects (expect to be waving your hands at your laptop a lot while coding).

As part of the workshop, we will go on a field trip, where we will record our own footage of urban motion. If you have a mobile device with a video camera, bring it along!